Hidden Markov Model (HMM) for Jarvis Word

HIDDEN MARKOV MODDEL FOR JARVIS WORD

MEF University

(May 2020)

Abstract — This article introduces what a Hidden Markov Model (HMM) is, how to create it, how it can be used in speech recognition, and the HMM created for recognition of the word 'Jarvis'. Hidden Markov Model is a statistical Markov model. This system being modeled is assumed to be a Markov process with unobserved states. Hidden Markov models are especially used for speech recognition handwriting, gesture recognition, score following and bioinformatics. Sequence Model creates an output for each unit in the sequence given as input. It is even a Probabilistic Sequence Model. That is, it calculates a probability for each possible output and selects the most probable. In the designed HMM, the Viterbi algorithm was used to train the dataset. Also the Forward algorithm was used to decide if the word was the desired word. Furthermore the multivariate gaussian distribution was used to find emission probability with mean value and covariance matrix for training. The best path and the best variables which are transition probability (A) and emission probability (B) were computed.

Index Terms — Hidden Markov Model, Markov Chain, Viterbi, Speech recognition, Multivariate Gaussian Distribution

I. INTRODUCTION

Speech recognition is an interdisciplinary subfield of computational linguistics that develops methodologies and technologies that computer software program devices with the ability to decode the human voice [1]. Speech recognition is widely used to operate a device, perform commands, or write without having to use any devices like a keyboard, mouse, or press any buttons. Raj Reddy was the first person to take on continuous speech recognition as a graduate student at Stanford University in the late 1960s [2]. Previous systems required the users to make a pause after each word. This system is designed to issue commands used when playing a chess game. Hidden Markov models (HMM) are good models for the analysis of sequential data, biological sequences and speech recognition.

Hidden Markov models are statistical models that are closely related, as the name already suggests, to Markov models. In contrast to Markov models, where the states are directly visible, the states are not directly visible in the case of HMM [3]. In other words, each hidden state holds different probability distributions that produce observable output. When applying the hidden Markov model , a multivariate Gaussian emission distribution in each hidden state is assumed. This is essentially since there are efficient parameter estimators for this special case. This distribution assumption, however, does not hold true for many data sets Hidden Markov models can be viewed as an extension of Markov chains. The only difference compared to common Markov chains is, that the state sequence corresponding to an observation sequence is not observable but hidden. In other words, observation is a probabilistic function of the state, and the underlying sequence of states itself is a latent stochastic process. This means that the sequence of states can only be observed indirectly through another stochastic process that emits an observable output. The mean of stochastic is assumed depending on the current state to future states.

II. HIDDEN MARKOV MODEL

A. Components of HMM

Q = q1q2 ...qn; a set of N states,

A = a11 ...ai j ...ann; a transition probability matrix A, each ai j representing the probability of moving from state i to state j,

O= o1o2 ...oT: a sequence of T observations, each one drawn from a vocabulary V = v1, v2,..., vn

B = bi(ot); a sequence of observation likelihoods, also called emission probabilities, each expressing the probability of an observation not being generated from a state i,

Pi= pi1,pi2,...,pin; an initial probability distribution over states. pi is the probability that the Markov chain will start in state i. Some states j may have pj = 0, meaning that they cannot be initial states.[7]

B. Markov Chain

The Markov chain is a simple concept which can explain most complicated real time processes. Speech recognition and text identifiers use this simple principle called Markov chain in some form. The Markov chain is based on a principle of “memoryless”. In other words, the next state of the process only depends on the previous state and not the sequence of states. States before the present state have no influence on the future except the present state. This simple assumption makes the calculation of conditional probability easy and enables this algorithm to be applied in several scenarios [3].

The left to right Markov chain shown in Figure 1 was used in this design. In this model states repeat their own state or pass the next state, not pass to other states. The system is described by N=6 distinct states: S1,S2 ...SN. States are defined as; S1: ‘J’, S2: ‘A’, S3: ‘R’, S4: ‘V’, S5: ‘I’, S6: ‘S’.

Figure 1. The left to right Markov chain

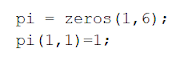

C. Likelihood Computing likelihood; given an HMM λ = (A,B) and an observation sequence O, determine the likelihood P(O|λ). There are two methods to calculate this: the Forward and the Backward Algorithm [5]. The Forward algorithm has three steps: Initialization, Recursion, Termination. α1(i)=pi.bi(O1) equation means that the first forward variable is calculated by multiplying the initial probability of state i by the emission probability b of that state given the observable O at time 1.[5] Recursion equation shown in Figure 2 defines the forward variable of state j as the product of the previous forward variable of state i, multiplied by the transition probability a between the previous state i to state j, multiplied by the emission probability b from state j to the observable O. Figure 2. Recursion Equation[5] Termination equation shown in Figure 3 tells us to find the probability of an observation sequence O deriving from an HMM model λ. The Backward Algorithm looks like a Forward algorithm, but it goes backward in time. It is a good test to show that the Forward algorithm works proficiently. Figure 3. Termination Equation[5] D. Decoding HMM models contain hidden variables. The task of determining which sequence of variables is the underlying source of some sequence of observations is called the decoding task. Decoding problems helps to find the best sequence [6]. HMM decoding algorithm is the Viterbi algorithm. Viterbi is a kind of dynamic programming. The Viterbi algorithm has similar steps like forward algorithm: Initialization, Recursion and Termination and Backtracking. This step finds the sequence of hidden states. Viterbi initialization equation shown in Figure 4 is the same as the initialization equation of the Forward Algorithm. Figure 4. Initialization[6] In the Viterbi find the maximum value among the multiplication results and assign that to the new Viterbi variable. Recursion equations shown in Figure 5. Figure 5. Recursion[7] Termination equation shown in Figure 6 represents the probability of the entire state sequence up to point T + 1. Find the maximized value of all the variables of every state at the end of the observation sequence. Figure 6. Termination[6] E. Learning We have observation sequence O and the set of possible states in the HMM, find the HMM parameters A and B. HMM training algorithm is the forward-backward shown in Figure 7 or Baum-Welch algorithm. The algorithm helps us train both the transition probabilities A and the emission probabilities B of the HM. Figure 7. The Forward-Backward Algorithm for Training[7] III. MULTIVARIATE GAUSSIAN DISTRIBUTION The Gaussian distribution is the most common and easily analyse distribution. A vector variable has a Multivariate Gaussian distribution. The purpose of this distribution is to find emission probability (B) with mean values and covariance matrix. Probability density function with this form is shown in equation 1: f(x|μ, Σ) = 1/(2π)d/2|Σ|1/2.exp ( −1/2.(x − μ) T .Σ−1 (x − μ)) (1) μ is a mean vector, Σ is a covariance matrix. The covariance matrix Σ is the expectation of the deviation of x from the mean in equation 2: Σ = E[(x − μ)(x − μ)T] (2) The possibility of that observation in the current state shown in equation 3: bj(ot)= 1/(2π)d/2|Σ|1/2.exp( −1/2.(ot − μj) T .Σj−1 (ot − μj)) (3) IV. EXPERIMENTAL RESULTS ‘Jarvis’ voice templates were recorded with 16 kHz. Each recorded audio has 14 MFCC features with different lengths varying by length of records. The states and observations were defined. The algorithm of states and observations shown in Figure 8 and Figure 9. Figure 8. The implementation of observation list We implemented the observation list by using the initialize observation list function. In the first part, we seperate the observations equally. After updating A,B matrices, we updated the observation list and we got the final observation list. We use the function of figure 9 for updating the observation list and states. Figure 9. The implementation of states Initial probability (π) is defined as a 1x6 vector. First value of this vector, π (1,1), is 1 and other values are 0. Because systems must start from the first state. The initial probability shown in Figure 10. Figure 10. The implementation of initial probability Figure 11. The updated transition matrix The transition matrix is the matrix that records the rate of transitions between states. While this matrix is being updated shown in Figure 11, each new incoming data value is included in the process. For example, point A (1,1) is the rate of staying in state1 while in state1. When calculating this, the formula count (state 1 to state1) / (count (state 1 to state1) + count (state 1 to state 2)) is used. V. CONCLUSION In conclusion, we trained a model which have 6 states.This jarvis model keeps the stable observation list and best probable path that will occur when we say jarvis. The left-to-right HMM was used while training this model. In the HMM, the Viterbi Algorithm was used for updating our values. After the 5 train, we get the optimum observation list values and best probable path. When we compare it to 100 observation data, state 1 has 13 observation , state 2 has 20 observation , state 3 has 13 observation , state 4 has 19 observation , state 5 has 20 observation , and state 6 has 15 observation REFERENCES [1] Al Smadi, Prof-Takialddin, ''Automatic detection technique for voice quality interdisciplinary methodologies'', Journal of Advanced Sciences and Engineering Technologies(JASET). 1. 1-6. 10.32441/jaset.v1i1.54. , 2018. [2] Payende, N., Gvahami M., ''Designing an intelligent translation software by audio processing techniques'', World Essays Journal / 3 (2): 129-140, 2015. [3] Pfundstein, Georg. “Hidden Markov Models with Generalised Emission Distribution for the Analysis of High-Dimensional, Non-Euclidean Data.” 2011. [4] Ege,”Hidden Markov Models(Saklı Markov Modeli)”,Oct 9, 2019, Retrieved from; https://medium.com/@egealpay1/hidden-markov-models-sakl%C4%B1-markov-modeli-b381380d0aca [5] Maria Burlando, "Hidden Markov Models — Part 1: the Likelihood Problem", Dec 29, 2018, Retrieved from; https://medium.com/@Ayra_Lux/hidden-markov-models-part-1-the-likelihood-problem-8dd1066a784e. [6]Maria Burlando, "Hidden Markov Models — Part 2: the Decoding Problem", Jan 3, 2019, Retrieved from; https://medium.com/@Ayra_Lux/hidden-markov-models-part-2-the-decoding-problem-c628ba474e69. [7] Daniel Jurafsky & James H. Martin, "Speech and Language Processing: An introduction to natural language processing,computational linguistics, and speech recognition", October 2, 2019. [8] Mark Stamp, "A Revealing Introduction to Hidden Markov Models", October 17, 2018. |

Comments

Post a Comment